What Is a Test Case in Software Testing?

In general usage, test case is a broad term that describes a project or event examining a past outcome. In the practice and profession of software testing, a test case is a set of documented actions (the how-to steps) that govern the observation and analysis of a specific requirement.

The following discussion will cover test cases only in the context described above. For more information on the full spectrum of concepts associated with test cases and software testing principles and practices, including specific levels of software testing (unit, smoke, regression, integration, system, acceptance, and more), the different testing methods (black-box, white-box, and nonfunctional testing), and the dynamics of manual testing and software test automation, read this article on automated software testing.

The test case is an indispensable component of the software development lifecycle (SDLC). Software testers typically write, perform, and document test cases as part of the quality assurance (QA) process. By executing specified actions, testers can find problems with software and identify defects (or bugs).

Test case documentation describes to others how the testers reached the result of each trial. This documentation validates the testing activity and lists the components that contribute to a conclusion. The set of conditions in the test case defines the expected outcome of the test. By executing the actions specified by a test case, the team can determine whether a unit of software code, the features of an application under test (AUT), or the overall software behind a system under test (SUT) exhibits the expected behavior.

The Standard Test Case Format

Whether you use a word processing document, a spreadsheet, or a test management tool, format the test case to address the steps of a single executable test for an individual requirement. Test case documentation is not designed to be a database log of past results, so it is not formatted to show previous results.

A test case addresses what to test, the expected results, and the steps involved in determining an independent pass/fail outcome. In general, test cases include the following test criteria fields:

- Test Case Statement: This statement, or test description, provides information on what the test seeks to confirm (i.e., the requirement being tested), the tools used to perform the test, the conditions (environment) of the test, and information on alternative testing techniques, if applicable. (Examples of alternative testing techniques include equivalence partitioning, error guessing, and state transition testing.) Always keep the end user in mind when writing the test case statement.

- Test Setup: These are the prerequisites (or preconditions) for the test. They include a test review, a test approval, a test execution plan, test proofs, a test reporting process, appropriate use cases, and the risks pertinent to the test requirement. Test case dependencies include information on the version of the AUT, the relevant data files, the type of operating system and hardware, the browser details, security clearance, the date, and the time of day.

- Test Case ID: The test case must be identifiable and traceable back to the bug or defect logged as a result of the test. This method helps correctly identify and document each test case and the known errors, and it helps avoid redundant testing.

- Test Scenario: This includes information about a test in the form of specific, detailed objectives that help the tester perform a more accurate analysis. It does not, however, include particular steps or sequences. The test scenario addresses procedures that might overlap with multiple test cases.

- Test Steps: Test steps are transparent; they tell a tester how to execute the test in simple and clear terms. They also let the tester know the proper sequence in which to execute the steps. Use active verbs to describe specific actions, so people can quickly understand the objective. These steps are atomic: because no step overlaps with more than one test requirement or subfunction, it is clear what needs fixing when the test fails.

- Test Data: This section includes the variables, like account information, email addresses, or input values, necessary to execute a specific test step. Boundary value analysis is a method of determining the limits of a test data range. For example, a tester would try out a password tool with the minimum and maximum number of characters allowed.

- Expected Results: This includes any detailed and precise information or data that a tester should expect to see and gather from a test.

- Actual Results: Document all the positive and negative results that you receive from a test to help confirm or reject the expected results and detect any issues or bugs.

- Pass/Fail Confirmation: This is the part of the process during which testers discuss and review the result to determine whether the test was a success or a failure.

- Additional Comments: This section addresses all the other notes that the tester and the test generate, including time and name stamps.
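To make the fields above concrete, here is a minimal Python sketch of a test case record. It is an illustration under stated assumptions, not a standard schema: the class names `Step` and `ManualTestCase`, the helper `boundary_lengths`, the sample login data, and the 64-character maximum are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Step:
    """One atomic test step with its data and its expected vs. actual result."""
    description: str
    test_data: str = ""
    expected_result: str = ""
    actual_result: str = ""

    def passed(self) -> bool:
        # A step passes only when the recorded actual result matches the expectation.
        return self.actual_result == self.expected_result


@dataclass
class ManualTestCase:
    """Hypothetical record that mirrors the test criteria fields listed above."""
    test_case_id: str
    statement: str
    setup: str
    scenario: str
    steps: List[Step] = field(default_factory=list)
    comments: str = ""

    def status(self) -> str:
        # The test case fails as soon as any single step fails.
        return "Pass" if all(step.passed() for step in self.steps) else "Fail"


def boundary_lengths(minimum: int, maximum: int) -> List[int]:
    """Boundary value analysis: lengths just below, on, and just above each limit."""
    return [minimum - 1, minimum, minimum + 1, maximum - 1, maximum, maximum + 1]


case = ManualTestCase(
    test_case_id="TC_Login_01",
    statement="Verify that a registered user can log in.",
    setup="Registered account; Chrome browser",
    scenario="User logs in from the login page",
    steps=[
        Step("Enter username", "user@example.com", "Username accepted", "Username accepted"),
        Step("Enter password", "8-character password", "User is logged in", "Error shown"),
    ],
)
print(case.status())            # Fail
print(boundary_lengths(8, 64))  # [7, 8, 9, 63, 64, 65]
```

Keeping the expected and actual results on each step, rather than on the test case as a whole, is one way to preserve the atomic quality described above: a failure points at a single step.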

Helpful Test Case Hacks to Avoid Common Issues

To avoid common problems associated with test cases, remember that software testing is subject to continuous change and revision. For example, a change in requirements impacts what gets tested. When a change in the elements of a test occurs, the tester needs to update the test case accordingly. Likewise, different levels of testing require different test case conditions; testers address these changing requirements by making the necessary revisions or creating new test cases. Here are some more tips to avoid common test case problems:

- Simplicity: Test cases get distributed among development teams and business stakeholders. Some software development methodologies call for test cases to drive development, so a test case should not be complex or force readers to move from document to document to follow a test scenario. Write each test case using accessible and clear language, and avoid technical jargon. Describe manual test steps in detail and in sequential flow. Avoid digressions and complexity. If a test fails, it should reflect one failed condition, not multiple failures in a sequence of steps.

- Be Critical: Write test cases with the intent of uncovering information early and often. Be critical of the AUT when determining expected results, and do not rely on previous versions of an application to determine test behavior. When writing a test case, review it to confirm that others can execute it without instruction. Critique a test case scenario with the end user experience in mind to expose design or usability flaws. Seek peer reviews and open feedback for all test cases.

- Organize: Use standardized templates if you work with a spreadsheet system to manage test cases. Create each test case on a separate sheet, so each sheet reflects a single flow of steps. Test case management software can automate and organize case creation when multiple users are managing a large number of test cases. This kind of software is designed to help manage several test conditions (e.g., when you’re faced with clustered test cases or a sequenced batch of interdependent test cases). Set up training processes and support documentation for creating and managing test cases, so new employees have consistent resources and technical documentation.

Test Cases, Test Scripts, and Test Scenarios

In the field of software testing, test cases, test scripts, and test scenarios work in tandem, but they refer to different components of the discipline.

Test Case vs. Test Scenario

A test case is a document that articulates the specific conditions necessary to test the software. It is the how-to: the building blocks used to test a scenario. Test scenarios address the overall test requirements; for example, a scenario may anticipate how a user interacts with the software.

Using detailed documentation, test cases provide information about preconditions, the steps to take, and the expected results. Test scenarios might cover many test cases and give the overall conditions for each independent test case.

Test cases and test scenarios represent two very different aspects of testing in the following areas: the functionality of new code, the updates to an AUT feature, or a new function of the SUT.

Test Case vs. Test Script

A test script is a component of a test case. It supplies the how-to steps, in a manual or automated fashion, and often uses software code to test requirements. Test scripts change continuously because they are designed to match new feature requirements. They are also intended to test the same steps repeatedly, so they do not encourage flexible, exploratory testing or creative strategies for finding bugs. Formerly, the two terms distinguished between manual test steps (a test case) and automated test steps (a test script); today, the terms test case and test script are used interchangeably.
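As a rough illustration of an automated test script, the following sketch uses Python's standard `unittest` module to express login steps as code. The function `log_in`, the `REGISTERED_USERS` data, and the messages it returns are hypothetical stand-ins for an application under test; a real script would drive the actual application instead.

```python
import unittest

# Hypothetical stand-in for the application under test (AUT).
REGISTERED_USERS = {"user@example.com": "correct-horse"}


def log_in(username: str, password: str) -> str:
    """Return the message the hypothetical AUT shows after a login attempt."""
    if REGISTERED_USERS.get(username) == password:
        return "Welcome"
    return "Invalid username or password"


class LoginScript(unittest.TestCase):
    """Automated form of the manual steps: enter username, enter password, click Login."""

    def test_valid_credentials_are_accepted(self):
        # Positive test: expected result is the welcome message.
        self.assertEqual(log_in("user@example.com", "correct-horse"), "Welcome")

    def test_wrong_password_is_rejected(self):
        # Negative test: expected result is the error message.
        self.assertEqual(
            log_in("user@example.com", "wrong"), "Invalid username or password"
        )


# Load and run the script, keeping the result object for reporting.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(LoginScript)
result = unittest.TextTestRunner(verbosity=0).run(suite)
print("Tests run:", result.testsRun, "Successful:", result.wasSuccessful())
```

Because the script repeats exactly the same steps on every run, it suits regression checks better than exploratory testing.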

Test Case vs. Test Plan

A test case is a single component of an overall test plan. (Multiple test cases are known as a test suite.) A test plan is a test artifact, also known as a project document (or deliverable). Test plans define a project's test scope, risks, deliverables, and testing environment. Using a test schedule, a test plan outlines a project’s QA activities and assigns roles, responsibilities, and test objectives to a testing team.

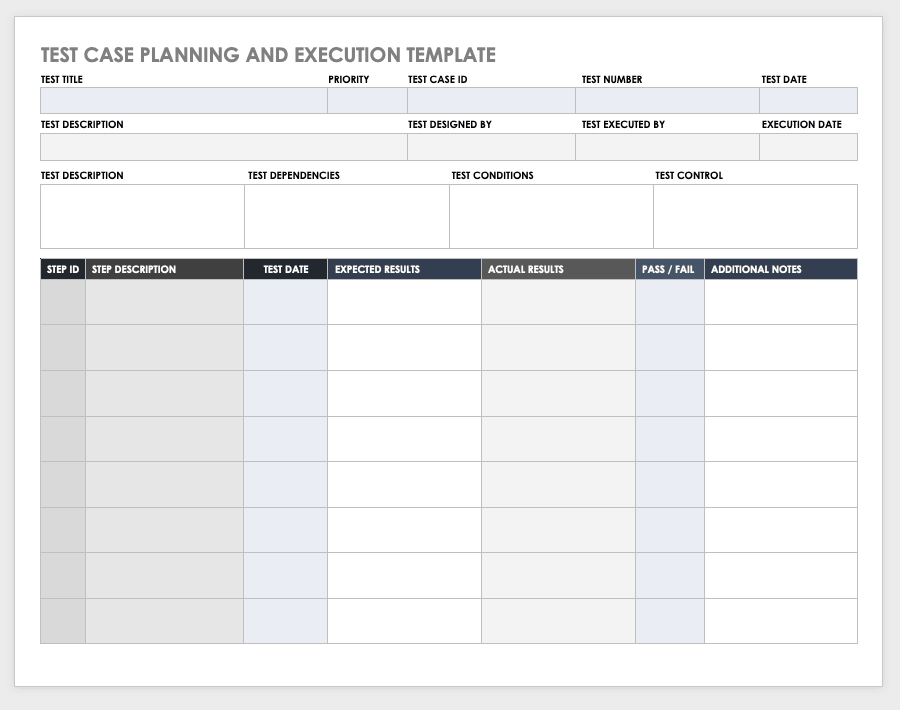

Test Case Planning and Execution Template

Use this test case planning and execution template to map out test plans for your software development project, execute test case steps, and analyze test data. It is designed to track tests by test ID and name, identify each stage of a test, add priority levels and notes, and compare actual versus expected results.


Test Case Management Tools and Test Automation

Testers can write their own test scripts (or code) or use test management tools to handle and automate testing. These software solutions automate test scripts and provide reporting capabilities across different requirements and different versions of the AUT.

The market for test management and test automation tools includes products such as HP Quality Center, Jira, TestLodge, TestComplete, and Selenium. Some of the most commonly advertised benefits of these solutions include test case templates, test versioning, automated bug tracking with email notifications to assigned developers, controlled collaboration access, traceable test coverage by requirement, and cloud backup storage.

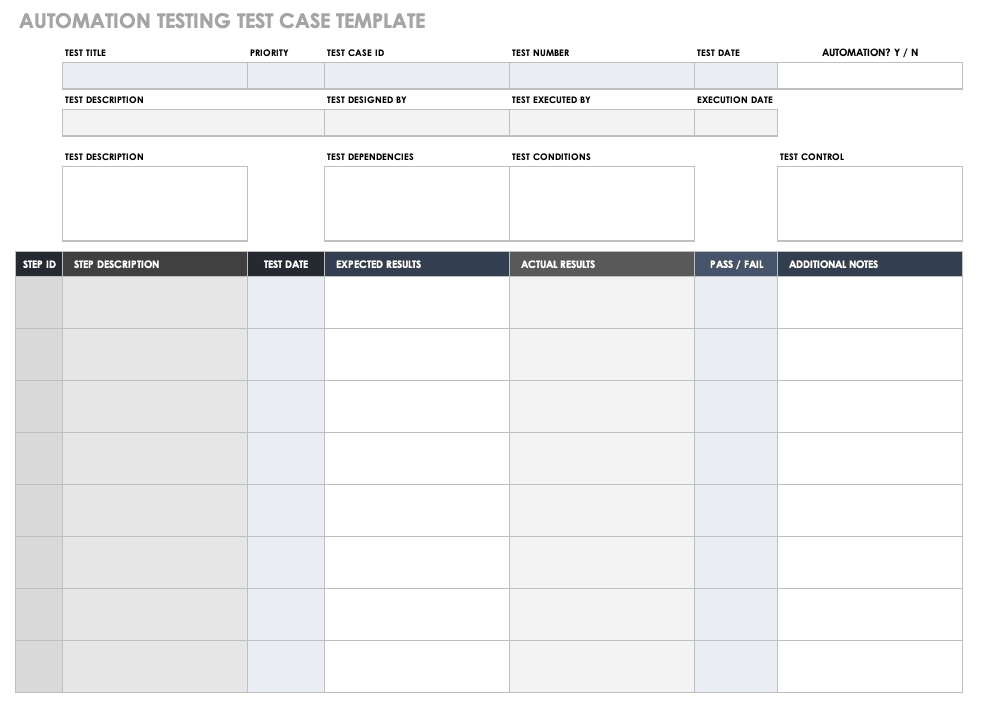

Automation Testing Test Case Template

Use this automation testing test case template to review the success or failure of automated software tests. Download and fill out this form to document the test name and ID, the test duration, each separate step and component, and any notes about the test, including the automated test scripts.


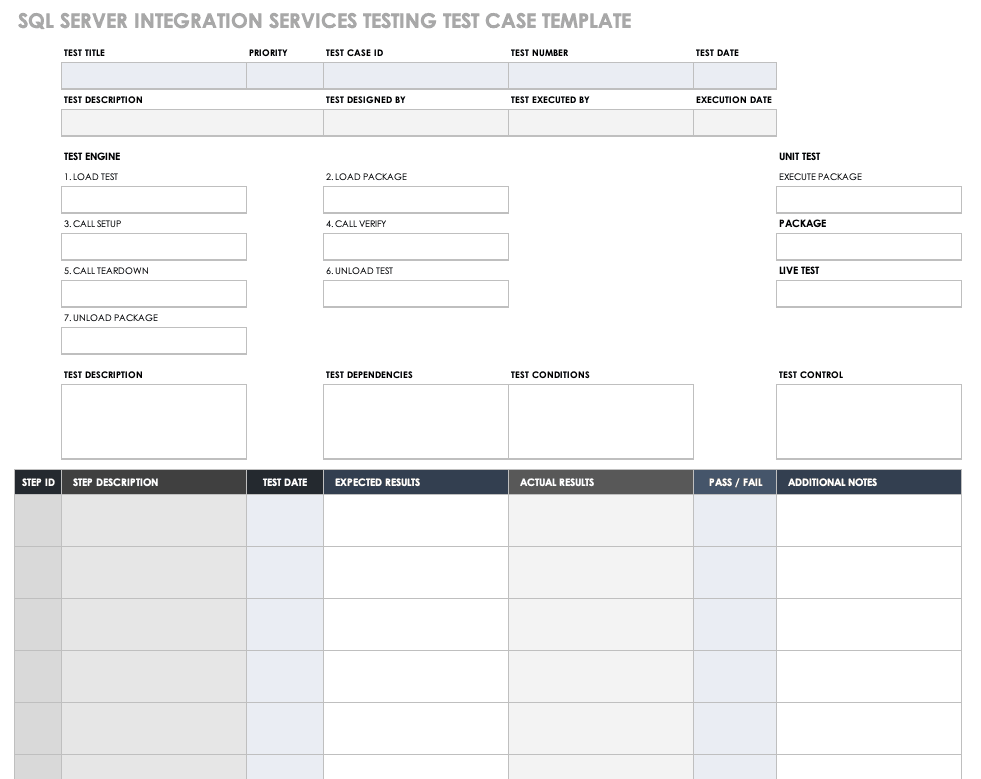

SQL Server Integration Services Testing Test Case Template

Software Requirements Specification (SRS) Document and Test Artifacts

Using test requirements derived from test artifact documents, testers write test cases and create test scripts for different scenarios. A test artifact is a by-product of the software development and planning processes that describes the function and design of the software. A test artifact might refer to use cases, requirements, or design documentation, like user interface (UI) wireframes. The development team generates these documents with help from the partnering client and business stakeholders.

A software requirements specification (SRS) document provides the information for test scenarios that flow from business and project requirements. Testers create test cases according to the SRS document, so QA activity is synced with development and business workflows. Depending on the organization and testing process, test case requirements might be derived from other test artifact documents, including the following:

- UI wireframes or screen prototypes
- Business or user requirements document
- Use case document
- Functional requirements document
- Product requirements document
- Software project plan
- QA/test plan
- Help files or release notes

Test Case vs. Use Case

Use cases are different from test cases. A use case provides a path, or multiple paths, to develop software solutions. A test case validates that the solution meets a specific requirement. Use cases supply input about expectations — captured by business and user requirements in the SRS document — and test cases work with this input to validate expectations. Test scenarios depend on detailed requirements derived from the functions of the use case. Test cases can be recycled, whereas test scenarios change frequently based on use cases.

Depending on the input from a use case, different test cases reflect different functional requirements. For example, a UI test requires a test case to verify that the static Username and Password labels display the proper text. To try out a function, the team creates a test case to explore what happens when the user clicks the Login button. To examine data entry, the team creates a database test case to check that the system calls the right database and grants permission. If a user forgets their password, a process test checks that clicking the Forgot Password button will generate an email to reset the user’s password.

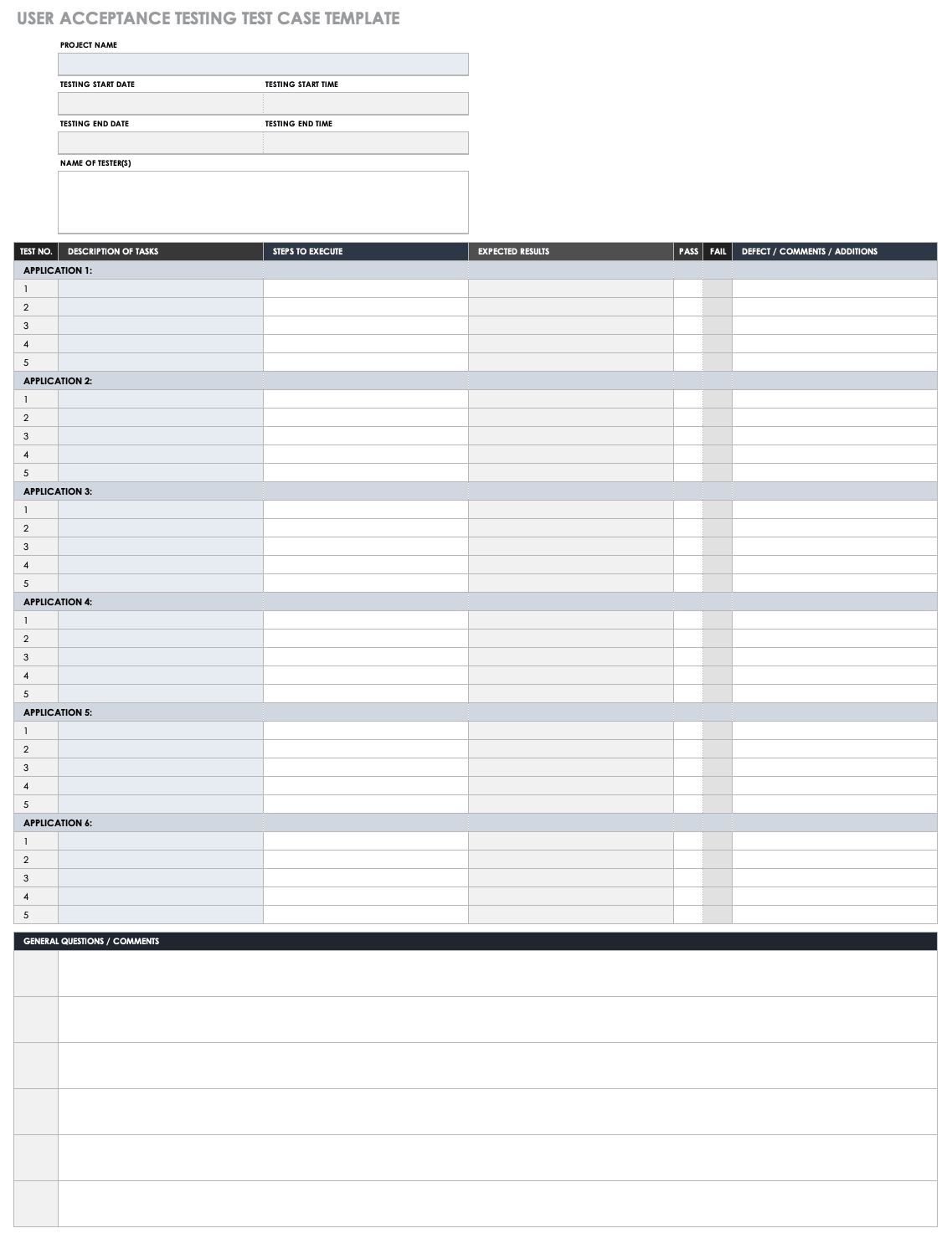

User Acceptance Testing Test Case Template

Download this comprehensive user acceptance testing test case template to ensure that your software matches the SRS document and meets all provided requirements. The document is designed to track individual applications, execution steps, and expected and actual results.


What Makes a Good Test Case?

Useful test cases have attributes that support the scientific method. They help manage the complexities of software testing and the SDLC. The scientific method requires defining a state of testing by using known conditions and variables, executing the behaviors in question to test a hypothesis against specific requirements, observing system behavior, and recording the results.

A test case empowers testers to think creatively and consider different angles and approaches for verifying and validating software requirements. Promote feedback, learning, and optimization by making your test cases accessible to all stakeholders.

Properly formatted, test cases are accurate, traceable back to a single requirement, easy to maintain, repeatable, reusable, and independent of other test cases. As with published scientific studies, peer-reviewed test cases promote accessible, open contribution from everyone involved in the SDLC and ensure proper test coverage (i.e., the right amount of code is tested at the right frequency). Poorly constructed test cases share the following traits:

- Broad Steps: Ineffective test cases use vague or overly broad steps, or they miss steps altogether. These gaps impact the ability to properly test a scenario or leave future users confused and testing irrelevant features. Be specific; otherwise, testers will cover only a small part of the overall functionality of the SUT or repeat only the most common use case scenarios.

- Informal Testing: If your test case is not systematic, traceable, and accessible by all stakeholders, it is useless. Some software development and testing methodologies skip the step of writing test cases, while others require writing to drive development. Regardless of the testing method, if you create a test case, it is a formal document that requires specific inputs and preconditions, a defined testing procedure, and an expected result. Poorly constructed, informal test case documentation prevents testers from running a battery of repeatable tests on future versions of software. (Regression testing is an example of a critical repeatable test.) Informal test case documentation lacks a positive and negative test case for each requirement and subsequent requirement.

- User Environment: Poorly constructed test cases fail to account for data dependencies or changes in the development environment. (An inadequate test case, for example, doesn’t account for a user who is already logged in.) Overly simple test case steps can misrepresent the user journey. If you create test cases without concern for special test execution steps, changes to requirements, a new version of the AUT, or updates to business use cases, they will not reflect the end user experience. Well-defined test environments, when paired with accurate data, yield more creative and thorough test case scenarios. This means being sure to include any user data dependency (e.g., that the user should be logged in, that the user should start the journey on a specific page, etc.).

- Missing Prerequisites: If, for a particular test case, testers lack access to use case requirements or SRS documentation, then that test case will lack objectives and concision. Confusion about test data or missing preconditions leads to poorly defined test cases. Testers need access to internal customer advocates and user data to determine how to test and what to verify. Creating test cases is similar to using the scientific method of inquiry. A clearly outlined test hypothesis relies on prerequisite data that is available prior to creating test cases. Lack of clarity and convention leads to poorly constructed test case documentation and a hard-to-identify test scope.

- Poor Documentation: Test cases written with assumptions about skill level or background knowledge omit details, so testers may miss parts of the plan or create a different result than expected. Poor documentation breeds ineffective test cases that lack impactful analysis. If you don’t clearly define the criteria for a passing result or if you take shortcuts, future testers will lack the benchmark details to determine whether a particular test passed or failed. In addition, when your test case documentation lacks a clear scope (i.e., reusable test keywords or test script functions), you compromise the ability to automate future tests and differentiate from other test cases.
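One of the traits above, the lack of a positive and a negative test case for each requirement, can be checked mechanically once test cases are traceable to requirements. The sketch below is one possible approach; the function name `missing_coverage`, the requirement IDs (`REQ-1` and so on), and the sample data are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple


def missing_coverage(
    cases: Iterable[Tuple[str, str, str]], requirements: Iterable[str]
) -> Dict[str, List[str]]:
    """Map each requirement ID to the kinds of test (positive/negative) it still lacks."""
    seen: Dict[str, Set[str]] = defaultdict(set)
    for _case_id, requirement_id, kind in cases:
        seen[requirement_id].add(kind)

    gaps: Dict[str, List[str]] = {}
    for requirement_id in requirements:
        missing = sorted({"positive", "negative"} - seen[requirement_id])
        if missing:
            gaps[requirement_id] = missing
    return gaps


# Each tuple is (test case ID, requirement ID, kind of test).
cases = [
    ("TC_Login_01", "REQ-1", "positive"),
    ("TC_Login_02", "REQ-1", "negative"),
    ("TC_Reset Password_01", "REQ-2", "positive"),
]
print(missing_coverage(cases, ["REQ-1", "REQ-2", "REQ-3"]))
# {'REQ-2': ['negative'], 'REQ-3': ['negative', 'positive']}
```

A report like this only works when every test case records the single requirement it traces back to, which is one practical reason to keep that field mandatory.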

Efficient Test Case Documentation

Efficient test case documentation begins prior to the creation of test cases. It starts with the collection of test requirements.

First, perform a line-by-line review of the test artifact documents. Gather requirements that are clear (i.e., those for which you understand the expectation of the feature or function described) and testable (i.e., those for which you can test and predict an expected result). Focus on identifying the keywords and phrases that accurately describe these requirements and make notes on the elements that are unclear. If you focus on the requirement gathering phase and pre-test conditions, your test coverage is likely to be more complete. You must have data for positive and negative test cases in order to create a test case. Be sure to gather both from test artifacts.

Next, check for standardized test case documentation. Review existing test project files for test cases that apply to the requirements you gather. When creating new test cases, focus on reusable, easy-to-maintain standards and write generic steps, so that you can swap in new data variables when a similar test case is required in the future.
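Generic steps with replaceable data variables can be sketched with Python's standard `string.Template`. This is only one way to do it; the step wording, the placeholder names (`page_url`, `username`, `button`), and the example URL are assumptions made for illustration.

```python
from string import Template

# Generic, reusable steps; each $placeholder is a data variable.
GENERIC_STEPS = [
    Template("Navigate to $page_url"),
    Template("Enter $username in the Username field"),
    Template("Click the $button button"),
]


def render_steps(steps, **data):
    """Fill every placeholder; substitute() raises KeyError if a variable is missing."""
    return [step.substitute(**data) for step in steps]


login_steps = render_steps(
    GENERIC_STEPS,
    page_url="https://example.com/login",
    username="user@example.com",
    button="Login",
)
for number, text in enumerate(login_steps, start=1):
    print(number, text)
```

Using `substitute` rather than `safe_substitute` is a deliberate choice here: a missing data variable fails loudly instead of leaving an unfilled placeholder in a published test case.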

A significant amount of test case management involves feature enhancements and changes to existing functionality, especially for web-based applications, which are prone to requirement changes. Efficient documentation enables a standardized approach to test cases that address common test scenarios and concepts involving website features. Browser compatibility testing is one example of a common test scenario.

What Is a Test Case in Manual Testing?

Manual testing is software testing performed by a person. The term is employed to distinguish it from the practice of automating testing, whether by using test scripts (software code) or test management tools with automation features. A test case is a document that you create manually, and it also supports automated software testing practices.

By manually collecting a set of test data (preconditions), determining expected results (postconditions), and developing a test case to verify specific requirements, testers have a starting point for automated test execution.

For example, the functional flow of the manual test steps for a test case might follow the basic, alternate, or exception flow approach to test the login page of a web application:

- Basic Test: Enter the login credentials for the web application using multiple browsers (Internet Explorer, Safari, Chrome).

- Alternate Test: Enter the login credentials for the web application using mobile devices with different screen resolutions.

- Exception Test: Enter the wrong login credentials to test if the user receives the error message on different operating systems (Windows, macOS, Linux).
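The three flows above can be expanded into a list of individual manual runs. The following sketch shows one way to enumerate them; the screen resolutions and the expected-result wording are assumptions, since the text does not specify them.

```python
BROWSERS = ["Internet Explorer", "Safari", "Chrome"]
OPERATING_SYSTEMS = ["Windows", "macOS", "Linux"]
# Hypothetical mobile screen resolutions; the article does not name any.
RESOLUTIONS = ["360x640", "768x1024"]


def build_matrix():
    """Return one (flow, environment, input, expected result) tuple per manual run."""
    runs = []
    for browser in BROWSERS:
        runs.append(("basic", browser, "valid credentials", "user is logged in"))
    for resolution in RESOLUTIONS:
        runs.append(("alternate", resolution, "valid credentials", "user is logged in"))
    for os_name in OPERATING_SYSTEMS:
        runs.append(("exception", os_name, "wrong credentials", "error message is shown"))
    return runs


matrix = build_matrix()
for flow, environment, test_input, expected in matrix:
    print(f"{flow:<10} {environment:<18} {test_input:<18} {expected}")
```

Listing every run explicitly makes it easier to see which browser, device, or operating system has not yet been exercised.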

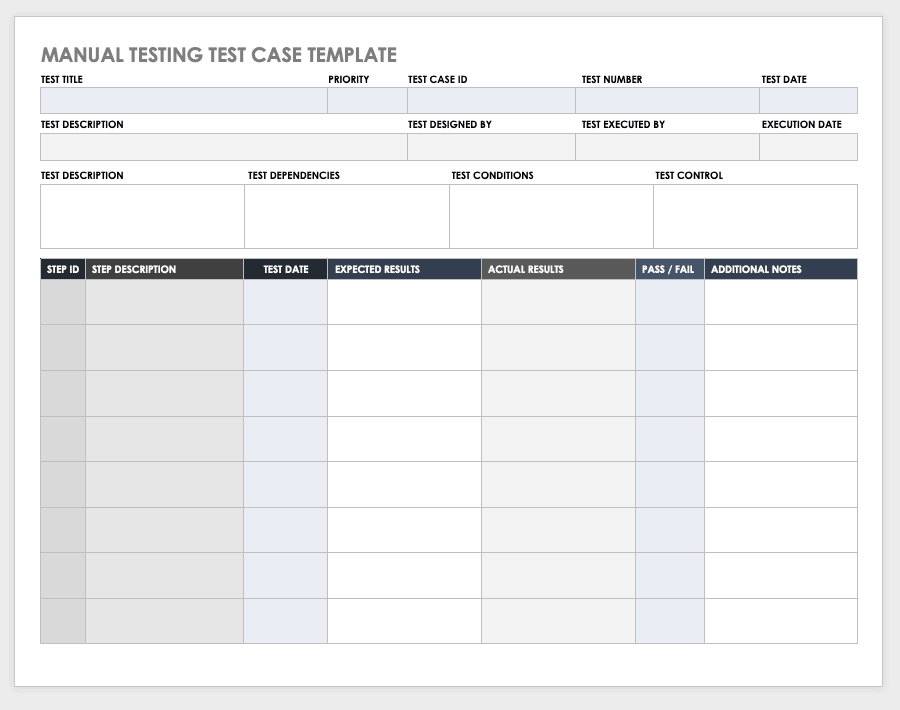

Manual Testing Test Case Template

Use this manual testing test case template to record testing steps, analyze expected results versus actual results, and determine a pass/fail result. It is designed to manually record each step of the testing process, the test ID and name, and additional notes to consider during analysis.


How to Write a Test Case in Microsoft Excel

This section is a step-by-step guide on creating a test case using Microsoft Excel 2016 (Office 365 edition). There is no right or wrong tool for test case management, but spreadsheets are the traditional choice.

Regardless of whether you document and administer your test cases via a test management solution or a project management collaboration tool, it is a good idea to use a standardized template for efficiency and consistency. This tutorial is based on the manual testing test case template.

- Test Identification and Traceability: Open the Excel spreadsheet template and click the Test Title cell. Name the test case based on the function, feature, or component you’re testing. Keep the title short and specific; a brief, precise title is important for traceability and communication. Next, click the Test Case ID cell and use the following format: TC_Test Title_Test Number. For example, if you are testing a web application login screen, the first test might have the following Test Case ID: TC_Login_01.

  Note: Each Excel sheet reflects one complete, independent test case, so you can copy the Test Title to name each separate sheet of the workbook.

- Test Priority: Select the test priority from the Priority drop-down menu cell (Low/Medium/High). This categorization is useful during test execution and for test coverage. You determine the categorization based on the business requirements found in the test artifact documents and the type and level of testing activity you use. For example, if you create a test case for nonfunctional requirements, it might have a Low priority compared to functional business requirements that carry a High priority.

- Test Setup and Data: With the end user in mind, enter the information on what the test seeks to confirm or the functional test data (which describes the test requirement) in the Test Description cell. Next, add nonfunctional data, such as AUT version, relevant data files, type of operating system, device hardware, and browser type, to the Test Dependencies cell. Then, in the Test Conditions cell, enter the information on other software modules or domain-specific test cases impacted by the test. Or, if applicable, enter the alternative testing techniques you used (for example, equivalence partitioning, error guessing, or state transition testing). Finally, enter your name in the Test Executed By cell and enter the date in the Execution Date cell.

- Execution Steps and Observations: Detail the test activity in sequential steps by first entering the step number in the Step ID cell. Next, using the Step Description cell, enter the specific details of the test actions. (When combined in sequence, these details constitute the test script.) For example, you would write “Enter username” or “Enter password.” Be transparent. Write each step in clear and simple terms, using active verbs. Write the steps in the proper order. Remember, these steps are atomic: they stand alone in sequence without any overlap of steps with another requirement or subfunction.

  Then use the Test Data cell to include any relevant data for that specific step, such as an active email address or input values. Enter the Expected Result. Next, enter the Actual Result of the test step in the corresponding cells. Finally, enter the Pass/Fail status in the cell and make any additional comments regarding that step in the Additional Notes cell.
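If you prefer to generate the spreadsheet rows rather than type them, the sketch below builds a Test Case ID in the TC_Test Title_Test Number format and writes step rows as CSV text, which Excel can open. The function names and the exact column headings are assumptions based on the cells named in this tutorial, not the template's actual layout.

```python
import csv
import io

# Column headings assumed from the cells named in the tutorial.
COLUMNS = [
    "Step ID", "Step Description", "Test Data",
    "Expected Result", "Actual Result", "Pass/Fail", "Additional Notes",
]


def make_test_case_id(test_title: str, test_number: int) -> str:
    """Build an ID such as TC_Login_01 (two-digit, zero-padded test number)."""
    return f"TC_{test_title}_{test_number:02d}"


def steps_to_csv(steps) -> str:
    """Serialize step dictionaries to CSV text; missing cells are left blank."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS)
    writer.writeheader()
    writer.writerows(steps)
    return buffer.getvalue()


steps = [
    {"Step ID": 1, "Step Description": "Enter username", "Test Data": "user@example.com"},
    {"Step ID": 2, "Step Description": "Enter password"},
]
print(make_test_case_id("Login", 1))  # TC_Login_01
print(steps_to_csv(steps))
```

Save the returned text to a `.csv` file to open it as a sheet; by default, `csv.DictWriter` raises a `ValueError` if a step contains a column that is not in `COLUMNS`, which helps keep sheets consistent.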

Manual Test Case Example

The following example is based on the previous tutorial and the manual testing test case template. This scenario is designed to showcase the concepts discussed in this article for creating a test case spreadsheet document using Microsoft Excel. The test verifies that a user receives an email at their registered address that includes a temporary link to reset their account password.

- Test Title: Account Login Password Reset

- Test Case ID: TC_Reset Password_01

- Priority: High

- Test Description: Verify that an email with a temporary password reset link is sent to the registered email address.

- Test Dependencies: Valid email account

- Test Condition: Functional requirement

- Test Step 1:
  - Step Description: Navigate to login page
  - Step Data: Login page URL
  - Expected Result: N/A
  - Actual Result: N/A
  - Pass/Fail Status: N/A
  - Additional Notes: Google Chrome browser

- Test Step 2:
  - Step Description: Submit registered account email address
  - Step Data: Valid email address
  - Expected Result: Email with password reset link is sent to valid email address
  - Actual Result: Email received
  - Pass/Fail Status: Pass
  - Additional Notes: Google Chrome browser

- Test Step 3:
  - Step Description: Verify that the user navigates to reset password web portal
  - Step Data: N/A
  - Expected Result: Click to follow reset password link
  - Actual Result: Please enter new password and confirm password
  - Pass/Fail Status: Pass
  - Additional Notes: Google Chrome browser

- Test Step 4:
  - Step Description: Verify new password is submitted for registered account
  - Step Data: Password must be 8 characters or more
  - Expected Result: Confirmation that password is reset
  - Actual Result: Navigates back to account login page
  - Pass/Fail Status: Fail
  - Additional Notes: Google Chrome browser

This test case uses only positive test conditions (i.e., valid input). A complete set of test cases should always account for both positive and negative outcomes. For more information on negative test cases, see this article on negative test cases.
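To show what negative test cases might add to the password reset example, here is a small table-driven sketch. The function `validate_new_password`, its messages, the matching-confirmation rule, and the extra test case IDs are hypothetical; only the rule that a password must be 8 characters or more comes from the example.

```python
def validate_new_password(password: str, confirmation: str) -> str:
    """Hypothetical reset-form rule: 8 characters or more, and both entries must match."""
    if len(password) < 8:
        return "Password must be 8 characters or more"
    if password != confirmation:
        return "Passwords do not match"
    return "Password reset"


# (test case ID, kind, password, confirmation, expected result)
CASES = [
    ("TC_Reset Password_02", "positive", "abcd1234", "abcd1234", "Password reset"),
    ("TC_Reset Password_03", "negative", "abcd123", "abcd123",
     "Password must be 8 characters or more"),
    ("TC_Reset Password_04", "negative", "abcd1234", "abcd12345", "Passwords do not match"),
    ("TC_Reset Password_05", "negative", "", "", "Password must be 8 characters or more"),
]


def run_cases(cases):
    """Compare the actual result with the expected result and record Pass or Fail."""
    results = {}
    for case_id, _kind, password, confirmation, expected in cases:
        actual = validate_new_password(password, confirmation)
        results[case_id] = "Pass" if actual == expected else "Fail"
    return results


for case_id, status in run_cases(CASES).items():
    print(case_id, status)
```

Note that a negative test case passes when the system rejects the invalid input as expected; a Fail status would mean the invalid password was accepted or the wrong message appeared.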
